This article is part of our body language guide.

Few things are more hyped than the coming AI revolution. Billionaires fight over whether AI is going to save us or damn us, and much of AI’s promise is still years away. But when it comes to applying AI to body language, the future is already here. It’s just not widely distributed yet. In this post we explore how you can already take advantage of some recent AI advances, as well as ways in which AI is already being put to work out in the world.

Note: Unfamiliar with AI? Stanford offers a great primer on the subject.

How Computers Read Body Language

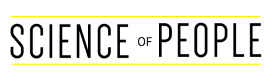

The short answer? There isn’t a short answer. Getting computers to understand human body language is no simple task. Let’s break down the steps computers take to get a good understanding of what your body language is saying. The screenshots in this section come from wrnch’s presentation at NVIDIA’s 2018 conference.

Key Point Detection

First, the computer needs to see, so it needs a camera. That part is simple enough: there are more cameras on Earth than people. But a computer doesn’t see the way we do; it only gets a stream of data from the camera. So software first has to recognize the shape of a human body and then map out the key points of our anatomy.

Some poor souls had to teach a computer what a knee looks like, and a shoulder, and an elbow, and every other body part, across many different lighting conditions and body types.

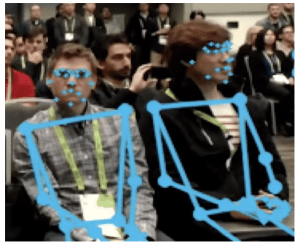

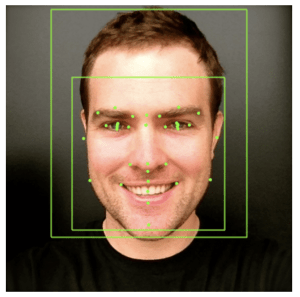

Then they had to do the same for the face. You can see how Google’s AI mapped my face in the image below.

Why is this important? Because it lets a computer turn something from the physical world into math. Here’s an excerpt of the data returned for my picture above:

```json
{
  "faceAnnotations": [
    {
      "landmarks": [
        {
          "type": "LEFT_EYE",
          "position": {
            "x": 170.85728,
            "y": 183.1824,
            "z": 0.0037799156
          }
        },
        {
          "type": "RIGHT_EYE",
          "position": {
            "x": 263.8876,
            "y": 182.71136,
            "z": -3.4158726
          }
        },
        {
          "type": "LEFT_OF_LEFT_EYEBROW",
          "position": {
            "x": 141.12056,
            "y": 164.7338,
            "z": 5.355021
          }
        }
      ]
    }
  ]
}
```

Computers love math. They understand it. They need it. So now the computer has a map of each part of our body, expressed as coordinates it can work with.
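To make “turning the body into math” concrete, here is a minimal Python sketch that loads the three landmarks from the sample data and computes two simple measurements: the distance between the eyes and a rough head tilt. The function names are hypothetical, and only the x and y image coordinates are used; the z values are ignored.

```python
import json
import math

# The three landmarks from the sample Vision API data above.
RESPONSE = json.loads("""
{
  "faceAnnotations": [
    {
      "landmarks": [
        {"type": "LEFT_EYE", "position": {"x": 170.85728, "y": 183.1824, "z": 0.0037799156}},
        {"type": "RIGHT_EYE", "position": {"x": 263.8876, "y": 182.71136, "z": -3.4158726}},
        {"type": "LEFT_OF_LEFT_EYEBROW", "position": {"x": 141.12056, "y": 164.7338, "z": 5.355021}}
      ]
    }
  ]
}
""")


def landmark_points(face: dict) -> dict[str, tuple[float, float]]:
    """Map each landmark type to its (x, y) pixel position (z is dropped)."""
    return {
        lm["type"]: (lm["position"]["x"], lm["position"]["y"])
        for lm in face["landmarks"]
    }


def eye_distance_and_tilt(points: dict[str, tuple[float, float]]) -> tuple[float, float]:
    """Return the distance between the eyes (pixels) and the head tilt (degrees)."""
    (lx, ly), (rx, ry) = points["LEFT_EYE"], points["RIGHT_EYE"]
    distance = math.hypot(rx - lx, ry - ly)
    # Angle of the line through both eyes; 0 means the eyes are perfectly level.
    tilt = math.degrees(math.atan2(ry - ly, rx - lx))
    return distance, tilt


face = RESPONSE["faceAnnotations"][0]
distance, tilt = eye_distance_and_tilt(landmark_points(face))
print(f"eye distance: {distance:.1f} px, tilt: {tilt:.2f} degrees")
# eye distance: 93.0 px, tilt: -0.29 degrees
```

Once a face is just numbers, questions like “is the head tilted?” become simple arithmetic.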

Key Points Together Make a Body

But knowing that an image contains an elbow, a knee, or a face doesn’t do much good on its own. So the computer next looks at all these points together and, using what it knows about how a body is put together, connects the dots. You can see a visual representation of this below.

One thing in particular to notice: in the image below there are extra dots (coordinates the computer pays attention to) in two areas, the hands and the face. We know we communicate a huge amount of information through facial expressions and hand gestures, so the computer looks even more closely at those two areas to “get the full picture,” as it were.
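The “connect the dots” step can be sketched in a few lines of Python. This assumes a detector has already returned named key points; the coordinates and the list of which points to join are made up for illustration and don’t follow any particular product’s skeleton format. Note how a point the camera can’t see (here, a hidden hip) simply leaves its bone out.

```python
import math

# Hypothetical key points for one detected person, in pixel coordinates.
# A real detector would also report a confidence score for each point.
KEYPOINTS = {
    "left_shoulder": (120.0, 200.0),
    "left_elbow": (110.0, 260.0),
    "left_wrist": (105.0, 320.0),
    "right_shoulder": (200.0, 200.0),
    "right_elbow": (215.0, 150.0),
    "right_wrist": (220.0, 100.0),
    "left_hip": (130.0, 340.0),
    # "right_hip" is missing, e.g. hidden behind a table.
}

# Which pairs of points form a "bone" (an illustrative subset, not a standard).
SKELETON = [
    ("left_shoulder", "right_shoulder"),
    ("left_shoulder", "left_elbow"),
    ("left_elbow", "left_wrist"),
    ("right_shoulder", "right_elbow"),
    ("right_elbow", "right_wrist"),
    ("left_shoulder", "left_hip"),
    ("right_shoulder", "right_hip"),
]


def connect_the_dots(keypoints, skeleton):
    """Return (start, end, length) for every bone whose two ends were detected."""
    bones = []
    for a, b in skeleton:
        if a in keypoints and b in keypoints:
            (x1, y1), (x2, y2) = keypoints[a], keypoints[b]
            bones.append((a, b, math.hypot(x2 - x1, y2 - y1)))
    return bones


for start, end, length in connect_the_dots(KEYPOINTS, SKELETON):
    print(f"{start} -> {end}: {length:.0f} px")
```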

Now We Infer Emotions from that Shape

So now the computer knows how to translate an image from a camera into the shape of our body, or of multiple bodies. It’s paying close attention to our faces and our hands. Now comes the easy part, for humans anyway. Reading emotions or intent from body language and facial expressions comes naturally to us; we’re wired for it.

But computers have no clue! This is where machine learning models come into play. Put simply, a computer needs to see a ton of examples of a given kind of body language, each labeled with what that body language means. Then, over time and with more and more examples, it learns to associate that same or a similar stance with that emotion.

The video below, again from wrnch, does a great job of illustrating this principle. It shows people making different physical expressions; when the software recognizes one as a gesture, it replaces the part of the body making that gesture with the equivalent emoji.
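The learn-from-labeled-examples idea can be sketched with one of the simplest machine learning techniques, a k-nearest-neighbors classifier. This is not wrnch’s model: the two features, the training values, and the emoji shortcodes are all assumptions for illustration, and a real system would learn from thousands of examples with far richer features.

```python
import math
from collections import Counter

# Hypothetical labeled examples. Each feature vector is derived from key points:
# (right wrist height above the shoulder, sideways wrist speed), both normalized.
TRAINING_DATA = [
    ((0.9, 0.8), "wave"),
    ((0.8, 0.9), "wave"),
    ((1.0, 0.7), "wave"),
    ((0.9, 0.0), "stop"),
    ((1.0, 0.1), "stop"),
    ((0.8, 0.05), "stop"),
    ((-0.5, 0.0), "neutral"),
    ((-0.6, 0.1), "neutral"),
    ((-0.4, 0.05), "neutral"),
]

# Gesture -> emoji shortcode to overlay on the body part (hypothetical mapping).
EMOJI_SHORTCODE = {"wave": ":wave:", "stop": ":raised_hand:", "neutral": ""}


def classify(features, examples, k=3):
    """Label a new pose by majority vote among its k nearest labeled examples."""
    nearest = sorted(examples, key=lambda ex: math.dist(features, ex[0]))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


gesture = classify((0.95, 0.75), TRAINING_DATA)
print(gesture, EMOJI_SHORTCODE[gesture])  # wave :wave:
```

Adding more labeled examples is exactly how this kind of model “learns”: nothing in the code changes, only the data it compares against.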

Infer Activities from Context

This is where the real magic happens. Understanding gestures is one (very impressive) achievement, but acting on them requires an additional layer of information: situational context. Take the examples above. People are waving or signaling “Stop!” But how do I, as the computer, act on that information? It depends.

If I’m a self-driving car approaching a crosswalk and I see an upheld hand in front of me, I can infer the person wants me to stop because they intend to cross the street. If I instead see that same person using the “move along” gesture, that’s a strong indication they’d prefer I continue on my journey rather than wait. A car just read your body language. Welcome to the future!
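Here is a toy sketch of that extra layer: the same recognized gesture can map to different actions depending on context. The gesture names, context names, and the cautious fallback rule are assumptions for illustration; a real autonomous vehicle weighs far more signals than a lookup table.

```python
from enum import Enum


class Action(Enum):
    STOP = "stop"
    PROCEED = "proceed"
    SLOW_DOWN = "slow down"


# Hypothetical policy table: (recognized gesture, situation) -> action.
POLICY = {
    ("raised_hand", "crosswalk"): Action.STOP,
    ("move_along", "crosswalk"): Action.PROCEED,
    ("raised_hand", "parking_lot"): Action.STOP,
}


def decide(gesture: str, context: str) -> Action:
    """Pick an action for a gesture given the car's situation.

    Any combination the table doesn't cover falls back to the cautious choice.
    """
    return POLICY.get((gesture, context), Action.SLOW_DOWN)


print(decide("raised_hand", "crosswalk"))  # Action.STOP
print(decide("move_along", "crosswalk"))   # Action.PROCEED
print(decide("wave", "highway"))           # Action.SLOW_DOWN
```

Defaulting to the safest action when the context is unfamiliar is one reasonable design choice; the point is that the gesture alone isn’t enough to decide.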

Applications of AI for Reading Body Language

To start, I’d recommend watching this excellent TED Talk, “Technology that knows what you’re feeling”:

Health

The healthcare field will be fundamentally transformed by artificial intelligence. Did you know that 50 percent of women who are told they have breast cancer are actually healthy? Researchers have developed AI that raised the accuracy of cancer diagnosis to 99 percent, and did it about 30 times faster than humans. Now, if that isn’t disruptive, I don’t know what is!

Google recently partnered with Moorfields Eye Hospital in London to apply AI to the time-consuming diagnosis of various eye diseases. From their post:

The time it takes to analyse these scans, combined with the sheer number of scans that healthcare professionals have to go through (more than 1,000 a day at Moorfields alone), can lead to lengthy delays between scan and treatment – even when someone needs urgent care. If they develop a sudden problem, such as a bleed at the back of the eye, these delays could even cost patients their eyesight.

The system we have developed seeks to address this challenge. Not only can it automatically detect the features of eye diseases in seconds, but it can also prioritise patients most in need of urgent care and recommend them for treatment. This instant triaging process should drastically cut down the time between the scan and treatment, helping sufferers of diabetic eye disease and age-related macular degeneration avoid sight loss.

Getting healthcare that is faster, more accurate and cheaper? That should be a win for everyone.

Safety & Security

Perhaps one of the greatest applications for AI in body language will be assisting and enhancing the intelligence and actions of safety and security professionals. These individuals have to make split-second decisions that could mean life or death. They rely on training and finely tuned instincts, but they’re still human. If they get it wrong, people’s lives are in danger.

MIT has already developed wearable technology that uses AI to gauge the tone of a conversation. Imagine a standoff between a hostage negotiator and a criminal in which the negotiator could monitor minute changes in vocal tone and help steer the crisis to a peaceful outcome. Or imagine a passport checkpoint where a border officer receives real-time feedback on your nervousness and honesty.

Marketing

Today’s marketers love data. We’ve all seen the lengths Facebook will go to in order to learn ever more about its users so advertisers can serve more relevant ads. Imagine if they could serve you different ads based on your mood. You probably wouldn’t want to see certain kinds of ads when you’re angry, and advertisers would likewise probably want to get in front of your eyeballs when you’re in a happy mood.

How could they possibly know this? Take a look at the device you’re using right now: it has a camera that is perfectly capable of providing data about your facial expressions. Why not try it out yourself? The same technology could be used to see whether you liked or disliked what you were watching, automatically gauging your reaction to movies, politics, and more. Hopefully this would all be done with your permission, of course. But the technology exists today to read your underlying reaction to anything you’re watching!

Try AI on yourself

I firmly believe AI is on the verge of hitting the big time and is not a fad. So I wanted to see how we might start using more AI here at Science of People. Let’s try a couple of tests and see what we come up with. I sat down with Vanessa and filmed a short video demo of two of Google’s APIs related to body language.

Determining emotions in our own photos

We’ve written quite a bit in the past about the importance of showing the right emotion in your profile photo. Google’s Vision API now offers some AI assistance in sorting out the emotions you show in your profile photo, or in any photo, for that matter.
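If you’d rather check photos in bulk than one at a time, the face detection results can also be read programmatically. Each face in the Vision API’s JSON response carries ratings such as `joyLikelihood` and `angerLikelihood`, expressed as values from `VERY_UNLIKELY` to `VERY_LIKELY`. The sketch below assumes you’ve already saved a response as JSON; the sample values are invented, and the `visible_emotions` helper and its default threshold are our own choices, not part of the API.

```python
import json

# The four emotion ratings the Vision API reports for each detected face.
EMOTION_FIELDS = {
    "joyLikelihood": "joy",
    "sorrowLikelihood": "sorrow",
    "angerLikelihood": "anger",
    "surpriseLikelihood": "surprise",
}

# Likelihood values, ordered from least to most likely.
LIKELIHOOD_ORDER = ["UNKNOWN", "VERY_UNLIKELY", "UNLIKELY", "POSSIBLE", "LIKELY", "VERY_LIKELY"]


def visible_emotions(face: dict, threshold: str = "LIKELY") -> list[str]:
    """Return the emotions rated at or above `threshold` for one face."""
    cutoff = LIKELIHOOD_ORDER.index(threshold)
    return [
        name
        for field, name in EMOTION_FIELDS.items()
        if LIKELIHOOD_ORDER.index(face.get(field, "UNKNOWN")) >= cutoff
    ]


# Hypothetical response for a smiling profile photo (values made up).
sample = json.loads("""
{"faceAnnotations": [{
  "joyLikelihood": "VERY_LIKELY",
  "sorrowLikelihood": "VERY_UNLIKELY",
  "angerLikelihood": "VERY_UNLIKELY",
  "surpriseLikelihood": "UNLIKELY"
}]}
""")

for face in sample["faceAnnotations"]:
    print(visible_emotions(face))  # ['joy']
```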

Let’s go back to my example from above.

You can see how precisely Google has mapped the key points of my face, turning them into coordinates. Then it can determine the overall shape of my face and interpret the emotions I’m exhibiting:

So, it nailed that. But how about something next-level? When I inspect the full response Google’s API returned for this image, I find this entry:

```json
"bestGuessLabels": [
  {
    "label": "vanessa van edwards husband",
    "languageCode": "en"
  }
]
```

Google not only knows who Vanessa is, or who I am, but it also knows we’re married! Now, if that isn’t Skynet, I’m not sure what is.

Extracting meaning from your written words

We also talk quite a bit about priming at Science of People. Google’s Natural Language API can help us look beneath the words we use every day and check whether we might be inadvertently priming an unintended emotion or entity.

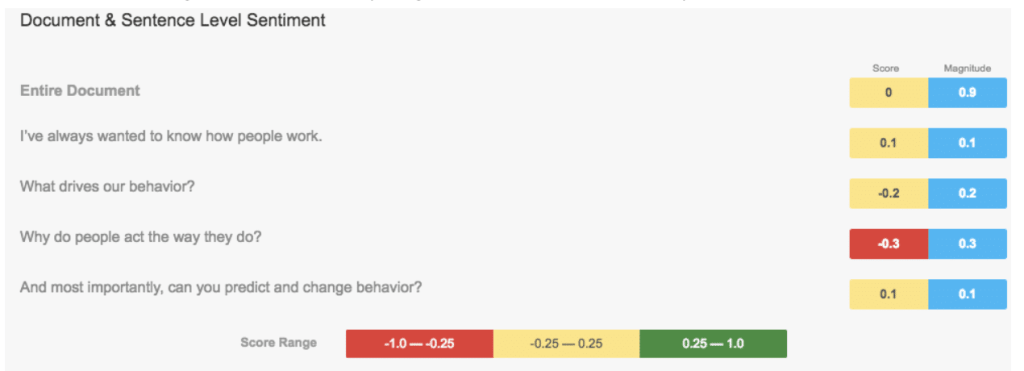

I grabbed the following copy from our About page and ran it through the API to see if we might be sending any unintended emotional subtext on that page.

I’ve always wanted to know how people work. What drives our behavior? Why do people act the way they do? And, most importantly, can you predict and change behavior?

Here’s what Google returned, with a sentiment score for each sentence of the copy.

You can see one sentence, “Why do people act the way they do?” was given a negative sentiment score. Taken out of context, this might have a negative connotation. Given its place amongst the rest of that copy, as well as the rest of that page (which I did not feed through the API), I think it’s still fine.

You might want to check your short bio on LinkedIn, your Twitter profile, or any other biography about yourself or your business to see if you send unintentionally negative signals with your word choices.
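If you want to run that check on your own copy, here’s a sketch of how you might scan the output of the Natural Language API’s `analyzeSentiment` method for sentences that lean negative. The field names follow the API’s JSON response shape (sentence scores run from -1.0 to 1.0), but the sample bio, its scores, and the -0.25 cutoff are assumptions for illustration.

```python
import json

# Hypothetical analyzeSentiment response for a short bio (text and scores are made up).
# Scores run from -1.0 (negative) to 1.0 (positive).
RESPONSE = json.loads("""
{
  "documentSentiment": {"magnitude": 1.4, "score": 0.2},
  "language": "en",
  "sentences": [
    {"text": {"content": "I help teams communicate better.", "beginOffset": 0},
     "sentiment": {"magnitude": 0.8, "score": 0.8}},
    {"text": {"content": "Most meetings are a waste of time.", "beginOffset": 33},
     "sentiment": {"magnitude": 0.6, "score": -0.6}}
  ]
}
""")


def negative_sentences(response: dict, threshold: float = -0.25) -> list[tuple[str, float]]:
    """List (sentence, score) pairs whose sentiment score falls below `threshold`."""
    return [
        (s["text"]["content"], s["sentiment"]["score"])
        for s in response.get("sentences", [])
        if s["sentiment"]["score"] < threshold
    ]


for text, score in negative_sentences(RESPONSE):
    print(f"{score:+.1f}  {text}")
```

Where to set the cutoff is a judgment call. As with our About page, a mildly negative sentence may read perfectly well in context.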


Wrapping Up

Hopefully, you now have a better idea of how computers have learned to read human body language, along with many of the applications already in place and those coming in the near future.

How do you see AI influencing body language and human-computer interaction in the future? In particular, do you think there’s a tool or service Science of People could build to harness the power of AI and help you in your day-to-day life? Let us know on Twitter!